On 20 January 2025, just hours after his inauguration, US President Donald Trump revoked a key executive order on the regulation of artificial intelligence (AI) issued by his predecessor, President Joe Biden. At a time when AI applications ranging from chatbots in customer service to automated systems for personnel decisions have long been part of everyday life, the move is causing new turbulence in the global AI discourse.

While Biden had attempted to establish clear guidelines and safety standards for AI companies with Executive Order 14110, Trump’s move opens the door to a more relaxed approach in the US. Major tech companies such as Meta, OpenAI, Google and Microsoft welcome the removal of what they see as overly strict reporting and disclosure requirements. But critics warn of far-reaching consequences: A lack of safety checks and transparency could accelerate innovation in the short term, but undermine trust in AI systems in the long term and lead to unwanted side effects.

This development on the other side of the Atlantic comes at a time when Europe is taking a different, more regulated approach: The first parts of the EU’s AI Act – also known as the AI Regulation – will become binding from February 2025. This means that many European companies are facing the crucial question of how to deal with artificial intelligence in a responsible, compliant, yet innovative way. While US companies may soon enjoy a perceived “competitive advantage” by being able to test AI freely and without major restrictions, the European Union is taking the path of stricter regulation and compliance requirements.

Many may remember the excitement surrounding the General Data Protection Regulation (GDPR), which came into force in 2018. With the EU AI Act, businesses are once again facing a profound disruption, one whose impact could be as far-reaching as that of the GDPR.

This article outlines which parts of the EU AI Act will be relevant from February 2025, how the new legislation is structured and what specific obligations companies will face. AI is no longer an abstract concept for the future but has long since become integral to our working world. This makes it all the more important to get clear guidance now: Which AI systems are prohibited? What are high-risk systems? How can teams prepare for the new requirements? Most importantly, what does the mandatory training from 2 February 2025 mean?

EU AI Act – the European Union’s response to the global AI race

Donald Trump’s recent decision to overturn Biden’s AI executive order reflects a familiar dilemma: how to balance innovation and regulation. Whereas Joe Biden had sought to tighten controls on the development of new AI systems, for example to prevent discrimination and bias, Trump’s measures now open the door to faster but less supervised progress. The US National Institute of Standards and Technology (NIST), which was previously tasked with developing guidelines and best practices, will no longer have the same authority to force companies to disclose their AI models.

This deregulation could reignite the debate in Europe, where a stricter course is already being pursued, as with the General Data Protection Regulation (GDPR). While the US is not necessarily looking to remove all controls, the trend towards less government intervention is clear. This contrasting starting point sets the framework for the coming months and years: unchecked AI growth in the US versus a regulated AI landscape in the EU.

History of the EU AI Act

In Europe, the idea of an AI regulation had already taken shape by 2021. The rapid development of powerful language models such as GPT-3 and GPT-4, which caused a sensation in 2022 and 2023, brought AI applications even more into the focus of EU lawmakers. By early 2024, the time had come: all 27 ambassadors of the EU member states agreed to the draft and the European Parliament finally gave the green light (see the latest developments and analysis on EU AI legislation).

Why the rush?

The EU has been competing with the technological superpowers of the US and China for years. The AI Act not only aims to create an ethical framework, but also to ensure Europe’s competitiveness. Similar to the General Data Protection Regulation (GDPR) a few years ago, this new regulation could set global standards and prompt other regions of the world to create or adapt their regulations.

Who is affected?

The EU AI Act is aimed at a wide range of stakeholders, from small start-ups developing AI-based tools to large technology companies and end-users in a variety of sectors. Be it a fashion retailer offering AI-based styling recommendations or a medium-sized company using AI to select candidates: All companies that use AI in any form will be affected by the regulation in the future. However, according to a German Bitkom survey, only a quarter of German companies have even heard of the new AI regulation, highlighting the urgent need for action.

What exactly are the new AI regulations?

Here is a first look at the bans and transition periods: The first bans will take effect from February 2025. AI systems that pose an “unacceptable risk” (Art. 5) will be banned immediately.

This includes, for example:

- Social Scoring: AI scoring people based on personal characteristics or social interactions.

- Automated real-time biometric identification in public spaces (e.g. facial recognition in supermarkets).

- Emotion recognition systems in sensitive areas such as schools or the workplace, where they can lead to manipulation or discrimination.

The gradual introduction of prohibitions and requirements is also reminiscent of the GDPR process. Back then, many companies found it difficult to scrutinise their data-processing procedures in a short period of time. AI systems are now being put to the test in a similar way. Some aspects of the AI Act won’t come into full effect until 2026. But if you don’t get to grips with the details by then, you’ll have lost a lot of time.

Risk classes instead of a blanket ban – the “risk-based” model

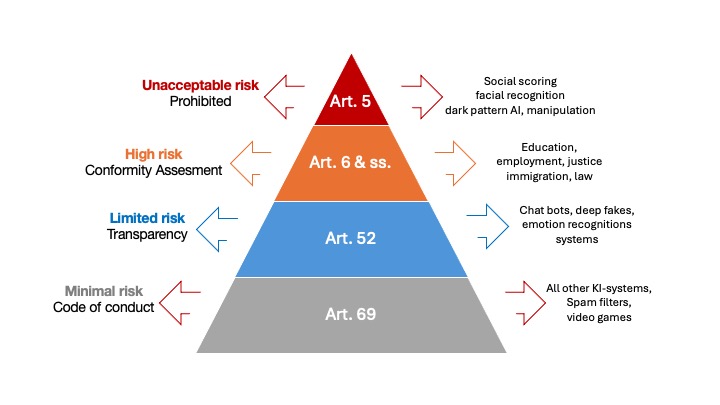

Much of the discussion about the EU AI Act revolves around so-called high-risk systems, which include AI solutions for HR processes (recruitment, performance assessment), safety-critical infrastructure and education. Central to the Act is a risk categorisation of AI systems.

Four levels are distinguished (see illustration):

- Unacceptable risk: These AI systems will be completely banned from February 2025. This includes social scoring, automated real-time biometric identification in public spaces, or systems that could psychologically manipulate people or violate their fundamental rights.

- High risk: Although these systems are not banned, companies must meet strict requirements to use these AI tools legally. This includes, for example, AI applications in recruitment and performance assessment, in safety-critical infrastructure, or education and healthcare.

- Limited risk: This primarily involves transparency obligations; it must be disclosed that AI is being used, for example in chatbots that generate automated responses (such as simple product information).

- Minimal risk: This covers most common, everyday AI applications – for example, simple text recognition systems, spam filters or image processing tools that do not pose a high risk potential. There are generally no specific requirements.

Examples:

- A company using AI software to select new employees may fall into the high-risk category and must comply with documentation and reporting requirements.

- Fashion retailers or e-commerce platforms that provide automated styling recommendations are unlikely to be high risk. However, if personal data is heavily mined, for example to assess creditworthiness or recognise emotional patterns, the rules can be much stricter.
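
For a first internal triage, the four levels can be sketched in a few lines of Python. This is a rough illustration only: the tag names and the mapping of tags to levels are assumptions based on the examples in this article, not the legal test in the Act, and any real classification needs a case-by-case review.

```python
from enum import Enum
from typing import Iterable


class RiskClass(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical tags, loosely derived from the examples in this article.
PROHIBITED_TAGS = {
    "social_scoring",
    "realtime_biometric_id_public",
    "emotion_recognition_workplace",
    "emotion_recognition_school",
}
HIGH_RISK_TAGS = {
    "recruitment",
    "performance_assessment",
    "critical_infrastructure",
    "education",
    "healthcare",
}
LIMITED_RISK_TAGS = {"chatbot", "generated_content"}


def classify(tags: Iterable[str]) -> RiskClass:
    """Return the strictest level triggered by any tag of a use case."""
    tag_set = set(tags)
    if tag_set & PROHIBITED_TAGS:
        return RiskClass.UNACCEPTABLE
    if tag_set & HIGH_RISK_TAGS:
        return RiskClass.HIGH
    if tag_set & LIMITED_RISK_TAGS:
        return RiskClass.LIMITED
    # Nothing matched: treat as minimal risk (e.g. a spam filter).
    return RiskClass.MINIMAL
```

The checks run from strictest to most lenient, so a use case that combines a chatbot with recruitment screening lands in the high-risk level rather than the limited one.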

“High-risk systems are the linchpin for many companies”

The basic idea behind this risk classification is that the level of compliance requirements (see the Future of Life Institute’s Compliance Checker for more information) is based on the potential damage to health, safety or fundamental rights. The focus is therefore on AI systems that manipulate people, violate their fundamental rights or put them at an unreasonable disadvantage. “Unacceptable risk” means that such AI technologies will not be given the green light, regardless of their potential benefits for productivity or economic growth. Companies will have to assess for themselves which category their AI application falls into – this is what the legislator will require when the regulation comes into force.

Competence and transparency – two important requirements

From 2 February 2025, Article 4 of the AI Regulation will require that all employees involved in AI have a “sufficient level of AI competence”. This is not just an empty phrase, but a real obligation. Business leaders will need to ensure that their teams are using AI in a compliant and competent manner. Failure to do so could result in financial penalties or civil liability.

Article 4 of the AI Regulation: Take training obligations seriously

This is where the training obligation comes in, which will apply from 2 February 2025. Employees must have sufficient AI skills when working with the relevant tools. This ranges from simple application training to complex legal and ethical issues. This includes:

- Laying the foundations: Conducting introductory seminars where employees learn what AI can do and what its limitations are.

- Specialised training: Anyone implementing or further developing AI systems needs in-depth knowledge of data security, algorithms and legal requirements.

- Documentation: Keep a written record of who has attended which training courses. This can be important evidence in the event of regulatory complaints.
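
The documentation step can be as simple as a structured list of training records. The following Python sketch shows one possible shape. The field names, the course title and the helper `missing_training` are illustrative assumptions, not a format prescribed by the Act.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List


@dataclass(frozen=True)
class TrainingRecord:
    employee: str
    course: str
    completed_on: date


def missing_training(
    ai_users: Iterable[str],
    records: Iterable[TrainingRecord],
    required_course: str,
) -> List[str]:
    """List employees who use AI but have no record for the required course."""
    trained = {r.employee for r in records if r.course == required_course}
    return sorted(set(ai_users) - trained)


# Hypothetical example data.
records = [TrainingRecord("alice", "AI basics", date(2025, 1, 15))]
print(missing_training(["alice", "bob"], records, "AI basics"))
```

Keeping the completion date in each record makes it easier to show when a given employee was trained if a regulator asks.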

Transparency is key: labelling AI-generated content

From August 2026, companies will have to label AI-generated content that people could misinterpret as authentic. This applies in particular to deepfakes, which are deceptively realistic simulations of voices or images. This may be particularly relevant in marketing, corporate communications or press relations. A practical approach is to log all AI-generated content, even for less controversial applications. That way, you won’t lose track of which images, texts or videos come from AI systems. In case of doubt, you will be prepared if the EU tightens its requirements further.

Check your contractors and service providers

Even if you are not an AI “provider” yourself, but only use an application, you may still be subject to regulatory obligations. This is especially true if you are “refining” an AI model or distributing it under your label. Agree on clear service level agreements (SLAs) with your software suppliers and service providers that regulate responsibilities and documentation obligations. Joint AI risk management helps to meet the requirements of the AI Regulation and avoid unpleasant surprises.

Consequences: Penalties and business risks

The EU AI Act includes severe penalties for violations – in some cases, even higher than those in the GDPR. The fines are particularly high for prohibited AI systems, which will be banned from use from February 2025. Using a prohibited AI system can draw a fine of up to €35 million or seven per cent of the previous year’s global turnover, whichever is higher. An infringement can therefore threaten a company’s existence, especially for SMEs. And even if you ‘only’ use high-risk AI systems and fail to comply with documentation or reporting requirements, you could face multi-million-euro fines. The best way to manage these risks is to take a proactive approach to compliance.
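
To make the ceiling concrete, here is a minimal Python sketch of the arithmetic for prohibited practices. It assumes the rule in Article 99 of the Act: the cap is the higher of €35 million and seven per cent of global annual turnover, while for SMEs the lower of the two applies. The function name and the `is_sme` flag are illustrative choices, and the result is only the upper bound, not the fine an authority would actually set.

```python
FIXED_CAP_EUR = 35_000_000   # fixed ceiling for prohibited practices
TURNOVER_SHARE_PERCENT = 7   # share of previous year's global turnover


def max_fine_prohibited(global_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper bound of the fine for a prohibited AI practice, in whole euros."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_cap = global_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    # Assumption per Article 99: higher amount in general, lower one for SMEs.
    pick = min if is_sme else max
    return pick(FIXED_CAP_EUR, turnover_cap)


# A group with EUR 1 billion turnover: 7% (EUR 70 million) exceeds the fixed cap.
print(max_fine_prohibited(1_000_000_000))
# An SME with EUR 100 million turnover: the lower amount (EUR 7 million) applies.
print(max_fine_prohibited(100_000_000, is_sme=True))
```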

Where necessary, companies should consider bringing in external experts if their capacity or skills in AI are insufficient. The cost of such advice and training can be a good investment. Finally, fines are not just a financial threat. Reputational damage can be at least as serious – those who come under public scrutiny for using prohibited or discriminatory AI applications risk losing customer confidence and investor interest.

How to do it right: Actionable takeaways

Before you dive into in-depth analysis, start by creating a complete list of all the software and AI tools you use. In many organisations, AI is used in different places without being centrally recorded – for example, a department might use ChatGPT for marketing copy, or a recruiter might use an AI tool to screen applications.

- Map your tools: Create an overview of which departments are using which AI tools.

- Determine AI risk class: Try to make an initial classification using the categories defined in the EU AI Act (unacceptable, high, limited, minimal). Online tools such as the Risk Navigator (TÜV) or the Compliance Checker (Future of Life Institute) can help.

- Document the outlook: Are you already planning new AI projects? Make a note of these too, as any future implementation will need to comply with the new law.
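
The three steps above can be captured in a small inventory. The Python sketch below is one possible starting point. The entry fields, the example tools and their risk classes are assumptions for illustration, and the initial classification still has to be checked against the Act.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class AIToolEntry:
    department: str
    tool: str
    purpose: str
    risk_class: str        # "unacceptable", "high", "limited" or "minimal"
    planned: bool = False  # True for projects that are not yet live


def tools_by_department(entries: Iterable[AIToolEntry]) -> Dict[str, List[str]]:
    """Map each department to the AI tools it uses or plans to use."""
    overview = defaultdict(list)
    for entry in entries:
        overview[entry.department].append(entry.tool)
    return dict(overview)


def needs_review(entries: Iterable[AIToolEntry]) -> List[AIToolEntry]:
    """Entries whose initial class calls for a closer compliance review."""
    return [e for e in entries if e.risk_class in {"unacceptable", "high"}]


# Hypothetical inventory based on the examples in this article.
inventory = [
    AIToolEntry("Marketing", "ChatGPT", "marketing copy", "limited"),
    AIToolEntry("HR", "CV screening tool", "screening applications", "high"),
]
print(tools_by_department(inventory))
print([e.tool for e in needs_review(inventory)])
```

Planned projects can be added with `planned=True`, so that future implementations show up in the same overview as the tools already in use.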

Between autonomy and responsibility: AI policy

An AI policy is still a rarity in many companies today. But it can be just as valuable as a social media policy. Here is what you should include in your internal policy:

- Data protection and confidentiality: what information can be entered into AI systems?

- Binding usage rules: Who can use AI and how, and what approvals may be required?

- Labelling obligations: When must it be disclosed that content is generated by AI (e.g. for marketing images)?

- Liability issues: Define processes for reporting and dealing with potential claims.

A well-structured AI policy provides clarity and can help in the event of a legal dispute, if you can prove that employees have been trained and instructed to comply with certain guidelines. This lays the foundation for a responsible, innovative and compliant AI strategy – and ensures that you can continue to operate successfully in the marketplace. Like any new regulation, the EU AI Act can be seen as an opportunity: Those who meet the necessary requirements early on and build trusted AI expertise will strengthen their position with regulators and their own customers.

The EU AI Act as a wake-up call for accountable innovation

While the US is increasingly opting for an unregulated AI policy, Europe is consciously taking the path of responsible and transparent use of AI with the EU AI Act. And yes, there are challenges to this approach: the compliance requirements are complex and the administrative burden can be overwhelming for both SMEs and large corporations. But it also offers a great opportunity: those who design their AI systems to be safe and compliant from the outset will gain the trust of customers and business partners.

Key takeaways:

- Respond early: Conduct an AI audit and prepare a risk assessment.

- Develop a clear AI policy: Define binding rules for the use of AI to reduce liability and compliance risks.

- Plan training: Make your team fit for AI – not only from a technical perspective, but also from a legal and ethical one.

- Maintain transparency: Keep track of AI-generated content and flag it early on.

- Balance risks and opportunities: See regulation as an opportunity to position yourself as a responsible business.

Don’t wait and see! Some regulations will not come into force until 2026, but a sufficient level of AI competence in companies is expected as early as 2 February 2025. This is when the first prohibited AI applications will be banned. Those who hesitate risk not only heavy fines, but also their competitiveness.

The EU AI Act is not just another obstacle to overcome – it is a wake-up call to lay a solid ethical and legal foundation. While other regions of the world rely on less stringent regulations, Europe’s approach offers an opportunity to lead the way in the responsible use of AI.

It’s time to act now! Strengthen your AI strategy before the first regulations take effect. Those who are prepared will benefit – those who hesitate will pay the price.
